Web Scraping: Costa Rica real estate

Web scraping is one of the tasks I find that I enjoy more every time I do it. Every time it’s a little different, with new challenges and puzzles.

I also really love Costa Rica. I’ve traveled extensively, from Baghdad to Kenya, and Brazil to Amsterdam - but Costa Rica always stands out.

Costa Rica also happens to be one of the few countries where it’s easy to buy land as foreign national, and I’ve been actively considering

investing there in a short-stay rental property. So I decided it was time to get serious, and serious means data.

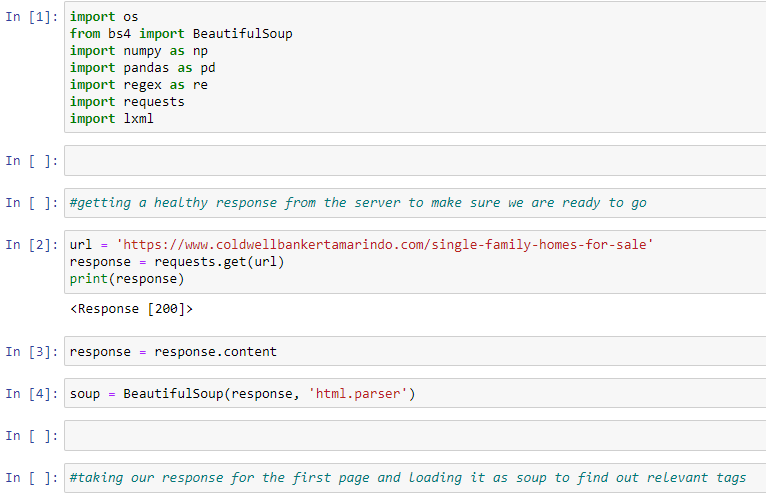

I decided to use Python for this project because it’s flexible, robust and open. There’s a lot of fantastic web scraping programs with slick user interfaces I’ve had my eye on, like Bright Data, but ultimately Python is the workhorse I know and love.

I decided to use BeautifulSoup originally because it requires more coding ability, which I’ve been seeking to develop through my scraping. I’ve had to push through several walls using BeautifulSoup, Hulk style, and I think I’m better for it.

I began by loading my libraries, getting my response code and cooking up my soup from the content - html.parser in hand.

Next I went to the site and found the tags that held the information I wanted: city/province, #bedrooms, #baths, property size and price.

These were all within some pretty funky tags, but weren’t too hard to find in the html.

Once they were picked out I wanted to see what the data we got from each page looked like, so I put them in their own lists using soup.find_all, one of the many handy features of BeautifulSoup.

In order to do the actual magic of scraping, I wrote a for loop. I used the range I knew from looking at the website to deal with the pagination, incrementing the url by one every time as a string (since url’s are strings they don’t mesh with int/floats).

The loop grabbed each instance of the funky class on the page and the data contained within, then appended a new list created to hold the data.

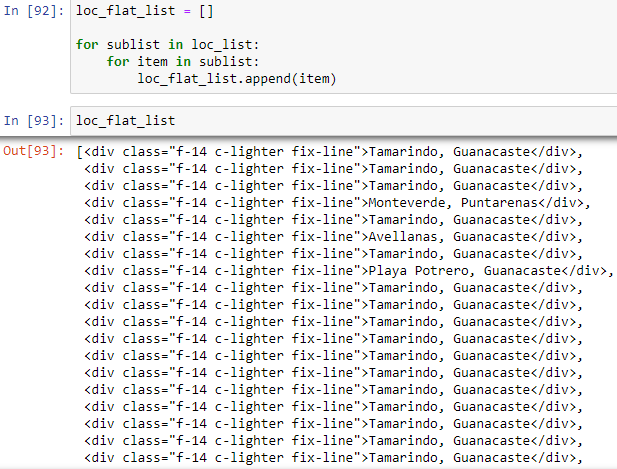

However, this resulted in a list of lists, which you can see from the highlighted brackets. Each sublist being a single page in the sequence. If we tried to create a data frame out of this (and I did!) we’d find that each ciry/province would form its own column, not stack neatly in rows.

But the solution was pretty simple.

Another for loop.

A new loc(ation) flat list was created, and the for loop ran through our

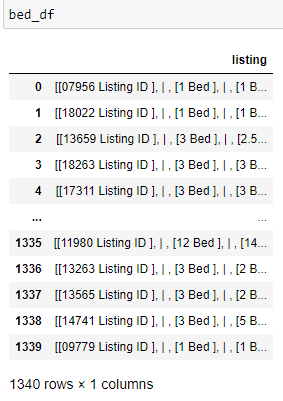

list of lists and appended the data to another .. list!This process was repeated for each of the tags containing relevant data, producing three very html cluttered lists. Luckily, Pandas does a pretty good job of dealing with the mess of tags on its own when we convert the lists to data frames.

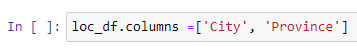

Once the data was put into dataframes it needed to be made as granular as possible; having city and province smashed into the same column just wouldn’t do.

Using the str.split command the columns could be split apart, using the convenient comma inherited from the lists as a delimiter. It wouldn’t be so simple going forward though, and the html mess the data inherited would turn out to be a real problem for the next data list.

And here’s where things get hard:

At first glance I thought, “no problem, I’ll regex this right into form”.

I was, however, very wrong.Try as I might I just couldn’t get regex to remove those troublesome brackets and bars. I’ll admit, while I’m competent with regex I’m no whiz - yet; my ability to see how powerful it can be is surpassed only by the vertigo I feel looking at some crazy regex lines that seem more like cuneiform than code.

I am, however, close to a whiz in excel.

Rather than hunt for hours on stack overflow stubbornly trying to stick to one tool, to prove to myself (and let’s be honest again: you) I could do it, I did what I learned to do when I was a mechanic:When one tool isn’t working alone, bring in another to assist the first.

Within a couple minutes with the find and replace tool in excel, the data was clean and ready to be brought back in, as it had been brought out, as a csv file.

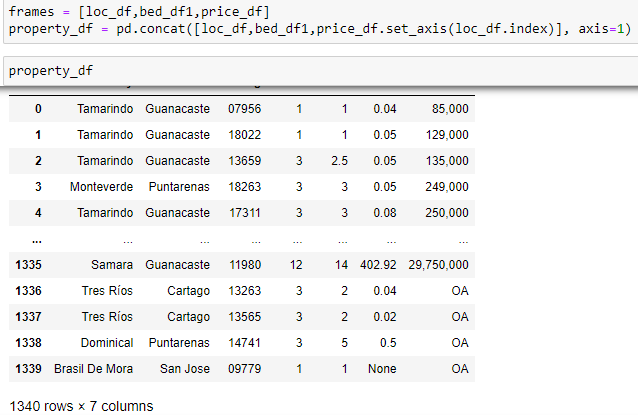

With the dataframes all cleaned up it was as simple as concatenating them all into once nice clean file - or almost.

I forgot about the commas in the price column, which would mess with our next step!All it took was a quick snippet of code to remove them though.

Likewise, I had some weird values in the tail of my dataframe, like None and OA, which were exceptions to the general data and made these rows pretty useless being so out of step with the data, so they were dropped.

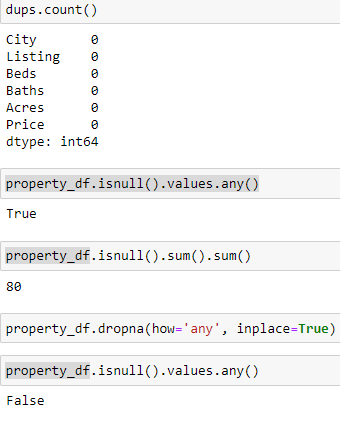

With the final cleaning pass the dataframe was checked for duplicates, nulls were dropped and data types were properly assigned now that the int and float columns were free of non numeric characters.

And here we go.

A clean, proper data set pulled from the html sloppy soup of a web scraper ready to be uploaded to my personal sql server and Power BI!

An afternoon well spent (and evening if you include this blog!)

As a bonus, for reading through this whole blog here’s a couple pictures from my last trip to Costa Rica. Maybe you’ll see why I’m looking for property there, and if you are, I have a dataset that can help!